Blast from the past

Classic network IP allow lists were probably catching on when these songs were popular:

Decades later, the internet appears to still be up in arms about this classic rocky concept:

If applying IP allow lists to the cloud excites you as much as Another One Bites the Dust at volume 11, read on.

In this blog, I’ll discuss some considerations regarding operationalizing, automating, and increasing the efficacy of IP allow lists in your cloud infrastructure. Although this discussion will be in the context of cloud infrastructure providers such as AWS, GCP, and Azure, it should also be applicable to other cloud infrastructure and application environments.

What are IP Allow Lists?

IP allow lists are conceptually simple: we have a list of CIDRs, we compare incoming traffic or requests against this list, and allow it if it matches. In classic networking scenarios, the incoming traffic would be evaluated against network filters such as firewall/router rules at layers 3 and 4.
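As a minimal sketch of that comparison, the snippet below uses Python's standard `ipaddress` module. The function name `is_allowed` and the sample CIDRs (documentation ranges, not real egress IPs) are assumptions for illustration only.

```python
import ipaddress

# Example allow list; these are reserved documentation ranges, not real corporate IPs.
ALLOWED_CIDRS = ["192.0.2.0/24", "203.0.113.0/24"]

def is_allowed(source_ip, allowed_cidrs):
    """Return True if source_ip falls inside any CIDR on the allow list."""
    address = ipaddress.ip_address(source_ip)
    networks = [ipaddress.ip_network(cidr) for cidr in allowed_cidrs]
    return any(address in network for network in networks)

print(is_allowed("203.0.113.25", ALLOWED_CIDRS))  # inside 203.0.113.0/24
print(is_allowed("198.51.100.7", ALLOWED_CIDRS))  # not on the list
```

A real enforcement point does this inside the firewall or cloud policy engine; the sketch only shows the matching logic.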

With the cloud it’s a little more complicated, not in its core definition, but in how it relates to cloud concepts and services. We are still talking about TCP/IP, but the application areas of allow lists can involve more than just network ACLs or firewall rules applied to VPCs/subnets. They might apply to security groups protecting compute instances, console access, or API access over HTTPS. Often specific resources or API activity are restricted, and the IP allow lists might be applied at a larger boundary or organization level as well as at a granular resource level.

To discuss operationalizing IP allow lists in the cloud, I’d like to touch on four basic areas or stages:

- Policy definition

- Implementation

- Configuration drift

- Monitoring / logging

Traditionally, implementation receives a lot of attention, and I’d like to give thought to the other stages, which can make maintenance and effectiveness of an IP allow list approach more feasible.

IP allow lists can be useful as an additional security layer for mitigating compromised credentials when combined with other controls, such as MFA, as we’ve discussed in previous blogs. For a cloud example of this scenario, organizations such as Netflix have applied this to AWS EC2 instances and temporary tokens to help mitigate compromised token scenarios.

Policy definition: Gaining internal agreement and alignment

One area that I think is underemphasized is what I call policy definition of the IP allow list. Fancy jargon aside, there needs to be agreement on how and where to actually specify what amounts to a security policy: a list of IPv4 CIDR ranges that reflect approved or authorized source IPs for the cloud resources that are being accessed. With that there are several areas to consider and plan for:

- Format: We need to determine the format of the CIDR list. This can be as simple as agreeing on a CSV format, or it might be the JSON format used by your cloud service provider. This is important because the rest of the operational workflow may need to parse or write this same format, yet it should remain clear and concise so that a human administrator can maintain it easily.

- Master copy: Rather than every administrator having a copy and no one knowing where the latest version is, it behooves each organization to think about where to store it. The last thing any organization needs is to question which IP allow list “should” be in production.

First preference would be to use the corporate standard for source control, e.g. GitHub. However, even an agreed-upon directory on a backed-up, shared file system with file versioning would be acceptable.

- Change management: The next decision is how much change management control and change auditing is desired. Advantages of a source control system include versioning, comments, an audit trail, and access control features.

- Maintenance: Finally, IP allow lists can become quite large, and being able to maintain the list by removing outdated or inaccurate CIDRs is important. We’ll discuss this more in the Monitoring/logging section.
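To make the format point concrete, here is one possible sketch of a CSV master copy and a loader that validates it. The column names (`cidr`, `owner`, `comment`) and the function `load_allow_list` are hypothetical; your organization's agreed format may look quite different.

```python
import csv
import io
import ipaddress

# Hypothetical master-copy layout: one CIDR per row plus owner and comment columns.
SAMPLE_CSV = """cidr,owner,comment
203.0.113.0/24,netops,VPN concentrator
192.0.2.0/24,netops,HQ egress
"""

def load_allow_list(text):
    """Parse CSV text and return validated, de-duplicated, sorted IPv4 CIDR strings."""
    networks = set()
    for row in csv.DictReader(io.StringIO(text)):
        # IPv4Network raises ValueError on malformed entries or host bits set (e.g. 192.0.2.1/24).
        networks.add(ipaddress.IPv4Network(row["cidr"].strip()))
    return [str(network) for network in sorted(networks)]

print(load_allow_list(SAMPLE_CSV))
```

Validating on load means a typo in the master copy fails early, before it reaches production tooling.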

Implementation

This tends to be the focus for many people, and it certainly is important to be able to implement allow lists effectively, within the cloud environment you have. There are often multiple areas where IP allow lists can be applied, so examples below will use some of the more common ones.

1. Static CIDRs

For IP allow lists to be maintainable, it’s important to stabilize the list of CIDRs as much as possible. In a post-COVID world, more employees work from home. Trying to account for all the dynamic IP ranges of home ISPs will turn IP allow lists into a nightmare of false positives (FPs) and false negatives (FNs) that angers users.

Rather than going down the path of trying to maintain a large list of dynamic, consumer IPs, VPNs have been used in the past to restrict the allowed CIDR ranges to a smaller set of static, corporate egress points. A VPN requires users to effectively connect through well-known networks and egress IPs in order to access cloud infrastructure. Cloud VPNs or Zero Trust Network Access (ZTNA) solutions (e.g. Netskope ZTNA Next) help in greatly reducing the cloud resources that need to be reached from the public internet. Those access methods themselves may employ IP allow lists but the maintenance problem is much smaller.

Today, there are also other approaches. With the advent of efficient CASBs, lightweight steering agents, secure web gateways, and other proxies (e.g., Netskope Next Gen SWG), it’s feasible to require remote workers to first go through corporate networking before accessing cloud applications. This means that the cloud infrastructure side can implement IP allow lists that have only the corporate list of CIDRs, instead of including home IP addresses of remote workers.

The work here could be significant with a large organization, but reducing the public IP footprint of client access is important not just to implement an IP allow list control, but overall for reducing the work with general security controls.

Assuming this has been optimized as much as possible, let’s look at how implementation works across cloud service providers like AWS, GCP, and Azure.

2. GCP

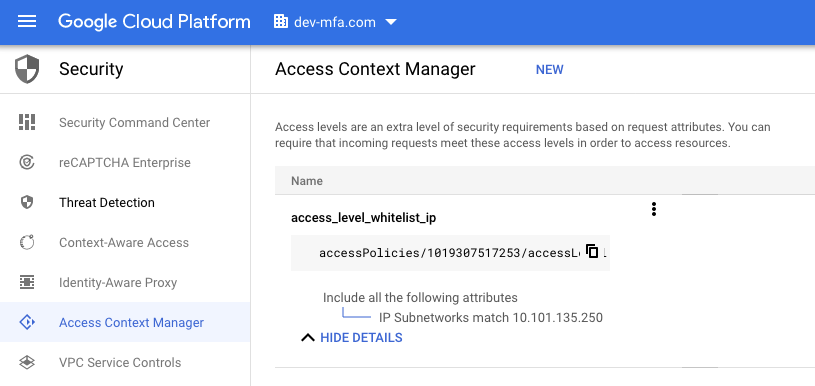

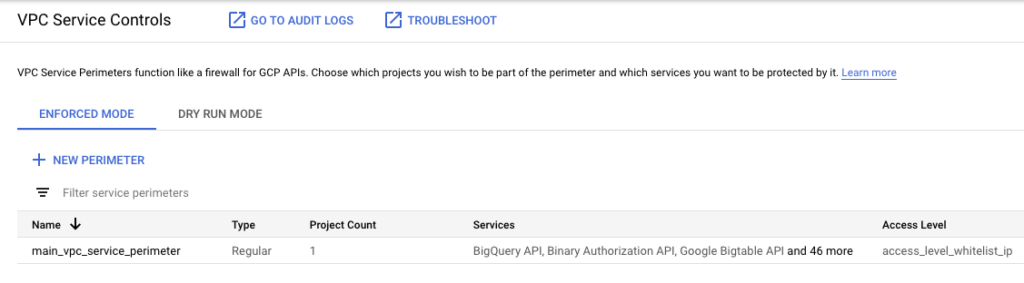

IP allow listing should be implemented with VPC Service Controls found in Google Cloud Console > Security > VPC Service Controls, using an access level based on IP address defined in Google Cloud Console > Security > Access Context Manager.

When implemented, users attempting to call the specified APIs from a non-authorized source IP will get an access denied error:

    another-host:~ $ gsutil ls -l gs://bucket-foo-dev-mfa
    AccessDeniedException: 403 Request is prohibited by organization's policy. vpcServiceControlsUniqueIdentifier: 93a9ce90174ce407

3. AWS

IP allow lists can be implemented at the network or for EC2 instances, but we’ll discuss using IAM policies, which are effective for allowing traffic for authenticated users against specific resources with flexible conditions.

An example policy might look like this:

    {
      "Version": "2012-10-17",
      "Statement": {
        "Effect": "Deny",
        "Action": "*",
        "Resource": "*",
        "Condition": {
          "NotIpAddress": {
            "aws:SourceIp": [
              "192.0.2.0/24",
              "203.0.113.0/24"
            ]
          },
          "Bool": {"aws:ViaAWSService": "false"}
        }
      }
    }

Note that this is a Deny statement denying access to API requests where the source IP is not in the list of CIDRs and where it is not a service using the user’s credentials. The last condition, aws:ViaAWSService, is important here so that we don’t have to worry about including IP addresses from Amazon’s own services. This is where implementation can become more complicated than standard networking IP allow lists: it depends on the expected source IPs seen at this enforcement point in this cloud provider.
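If the CIDR list lives in a master copy, a policy of this shape can be generated rather than hand-edited. The sketch below is only illustrative: `build_deny_policy` is a hypothetical helper, and it simply reproduces the structure of the example policy above from a list of CIDRs.

```python
import json

def build_deny_policy(allowed_cidrs):
    """Build a policy document that denies requests from outside allowed_cidrs."""
    return {
        "Version": "2012-10-17",
        "Statement": {
            "Effect": "Deny",
            "Action": "*",
            "Resource": "*",
            "Condition": {
                # Sorted so that regenerated policies are stable and easy to compare.
                "NotIpAddress": {"aws:SourceIp": sorted(allowed_cidrs)},
                # Skip the deny when an AWS service acts with the user's credentials.
                "Bool": {"aws:ViaAWSService": "false"},
            },
        },
    }

policy = build_deny_policy(["203.0.113.0/24", "192.0.2.0/24"])
print(json.dumps(policy, indent=2))
```

Generating the document from one source keeps the deployed policy and the approved list from diverging through manual edits.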

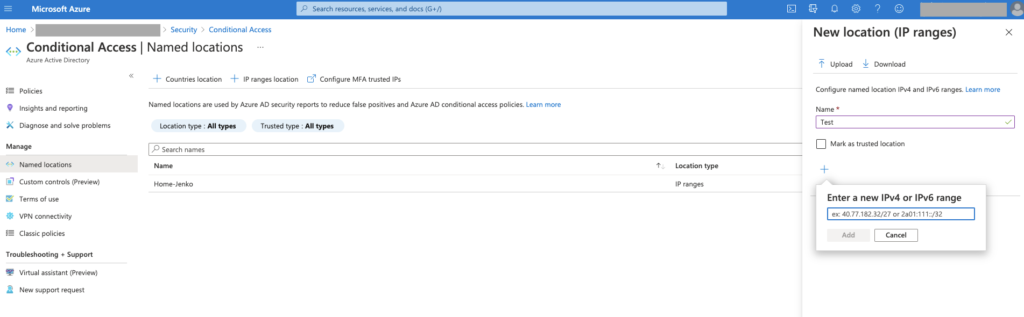

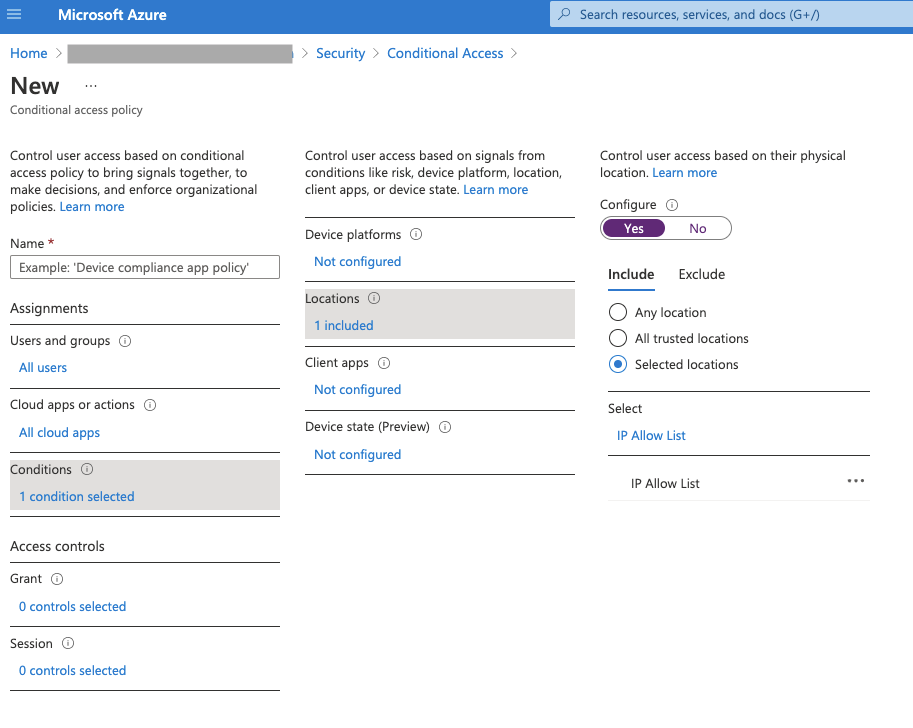

4. Azure

In the Azure Console, you can set policies with Conditional Access to apply IP allow lists to any or all users/groups, for any or all cloud applications. In Azure Console > Azure Active Directory > Security > Conditional Access, you can create locations based on CIDR ranges, and create policies to allow access from those ranges.

With all cloud providers, the implementation methods shown above are much more powerful than just checking on IP addresses, which raises an important point. Although this blog is discussing a narrower topic of IP allow lists, from a security viewpoint, you should be considering more complete policy enforcement. As an example, preventing compromised credentials should leverage MFA, possibly device characteristics (only “managed” devices are allowed access). The “conditions” capabilities in the above methods are powerful enough to accommodate such checks.

Configuration drift

Let’s assume we’ve implemented IP allow lists in our cloud environment. There’s a tendency, after testing, to move on. I’d suggest implementing an automated, recurring check to make sure that the list of CIDRs actually deployed in production is the expected version, i.e., to catch unauthorized, unapproved, or unexpected changes made directly in production that bypass change management.

Here are some areas to consider with implementing audit checks to detect configuration drift:

1. Compare vs. master copy

To know if your configuration has changed unexpectedly, we obviously need to know what it should be. Here is where the discussion of policy definition, specifically having a master copy in a well-known location, is crucial. We need to know the “latest” approved version. This is also why having the ability to audit versions of that file including who made the last modification, and why, is crucial. Using a source control system like Git can make this a lot easier. Worst case, having an agreed-upon, secure file share location can suffice but it will be difficult to track down the who/why if there is configuration drift.

2. Implementation

For implementation of the actual check, one has several options. You can build your own:

- Retrieve the latest approved version i.e. the master copy

- Use the CLI or SDK of the cloud provider to retrieve the policy definition

- If necessary, convert it into the same format as used in your master copy

- Do a diff between the two versions, and if any differences, perform appropriate actions (examples below)
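The comparison step of a build-your-own check might look like the following sketch. It assumes both lists have already been retrieved and converted to plain CIDR strings; retrieving the deployed list depends on your provider's CLI or SDK and is not shown. The function name `diff_allow_lists` and the sample values are hypothetical.

```python
import ipaddress

def diff_allow_lists(approved, deployed):
    """Compare two CIDR lists and report entries added to or missing from production."""
    approved_set = {ipaddress.ip_network(cidr) for cidr in approved}
    deployed_set = {ipaddress.ip_network(cidr) for cidr in deployed}
    return {
        "unexpected": sorted(str(n) for n in deployed_set - approved_set),
        "missing": sorted(str(n) for n in approved_set - deployed_set),
    }

approved = ["192.0.2.0/24", "203.0.113.0/24"]   # from the master copy
deployed = ["192.0.2.0/24", "198.51.100.0/24"]  # as retrieved from production

drift = diff_allow_lists(approved, deployed)
if drift["unexpected"] or drift["missing"]:
    print("Configuration drift detected:", drift)
```

Parsing both sides into network objects before comparing avoids false alarms from purely textual differences such as ordering or whitespace.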

Alternatively, the cloud providers also have solutions such as AWS Config, as well as commercial offerings such as Netskope.

Whatever your desired solution, it’s important to automate this as a recurring job running daily or even more frequently depending upon security/compliance requirements. Using the native cloud job schedulers, possibly with a serverless function, would be a natural way to implement this.

3. Actions

If a difference is detected, then we certainly want to log and alert about the change.

Some other considerations to evaluate in your DIY, native, or third-party implementation:

- Context: Consider providing as much context as possible in the logs and the alert so that the configuration drift can be assessed and remediated as quickly and accurately as possible. Specifically, include timestamps, file version numbers, a contextual diff (show exactly which CIDRs are new/deleted/modified), user principals/accounts involved in the change, change comments, ticket numbers, and anything that helps identify the who, what, when, and why of the change.

- Remediation: If it is an unapproved action that requires rolling back to the last valid version, you want to ensure that this can be done quickly and reliably. The last known approved version (master copy) must be retrieved, then applied into production, and then tested.

Ideally, this is done with normal processes (e.g. change management tracking) but if an exception process is used, make sure that you don’t get echo effects with a storm of alerts from the configuration drift checks.

Although manual playbooks with a few console clicks or CLI commands are ok, a pre-tested script with arguments may be both quicker and less error-prone. This also allows for both manual actions as well as automated actions (where a strict production policy might be that any production policy change not performed via change management rules will be automatically rolled back).

- Usage activity: Consider if you can implement additional context around usage activity if an unauthorized change added or enlarged a CIDR range.

This is more complicated to implement, but the point is that if an unauthorized CIDR was added at time X, followed by actual access from that CIDR afterward, it would be useful to have the actual src IP or any other information from access logs such as the user principal/account doing the access. This would help the follow-on investigation or help determine if it’s an actual incident.

This would imply having API access to search the logs, so it’s dependent upon the logging used, whether native or commercial solutions such as SIEM vendors. The search might take time, so alternatives could be creating a script to do this search and having the alert send out the exact command-line with appropriate arguments to execute a search so that it’s done on demand but very easy for the recipient of the alert.
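As a rough illustration of the usage-activity idea, the sketch below filters already-parsed access records for requests that came from a newly added CIDR after the time of the change. The record fields (`time`, `source_ip`, `principal`) and the function `accesses_from_cidr` are assumptions; real log schemas and search APIs vary by provider and SIEM.

```python
import ipaddress
from datetime import datetime, timezone

def accesses_from_cidr(records, cidr, since):
    """Return records whose source IP is inside cidr at or after the given time."""
    network = ipaddress.ip_network(cidr)
    return [
        record for record in records
        if record["time"] >= since
        and ipaddress.ip_address(record["source_ip"]) in network
    ]

# Hypothetical, already-parsed access log records.
records = [
    {"time": datetime(2024, 1, 10, 9, 0, tzinfo=timezone.utc),
     "source_ip": "198.51.100.9", "principal": "alice"},
    {"time": datetime(2024, 1, 12, 14, 30, tzinfo=timezone.utc),
     "source_ip": "198.51.100.9", "principal": "bob"},
    {"time": datetime(2024, 1, 12, 15, 0, tzinfo=timezone.utc),
     "source_ip": "192.0.2.44", "principal": "carol"},
]

change_time = datetime(2024, 1, 11, tzinfo=timezone.utc)  # when the CIDR was added
hits = accesses_from_cidr(records, "198.51.100.0/24", change_time)
for hit in hits:
    print(hit["time"].isoformat(), hit["source_ip"], hit["principal"])
```

An alert could embed the CIDR and change time as arguments to a script like this, so the recipient can run the search on demand.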

Monitoring/logging

Denies: Monitoring and logging are two different capabilities with different use cases. It’s worth considering what the value is of real-time monitoring or logging around access activity with respect to the IP allow lists. Specifically, should you care about detecting and logging blocked traffic or denies?

Detection: For detection of suspicious activity (remember we started with a scenario of possible credential compromise), this can be really noisy with a lot of FPs, so real-time monitoring of denies may not be that useful, but this should still be reviewed. You may expect a subset of users and environments to be under strict controls and tight adherence to policies with low traffic, so that anything, including a failed access, is worthy of a real-time alert.

Forensics: For forensics, logging should be done in as much granularity as possible. Although logging is normally an obvious point, logging of API calls in the cloud can be complex, as there are issues of which events are logged, whether activity using temporary credentials is logged with reference to the real account associated with the credentials, etc. Fortunately, since we’re talking about IP allow lists, some of this is simpler, but you still want to ensure that logging is configured correctly to capture the information you need. Choose a storage option that fits cost and access needs and is secure from tampering (read-only, object versioning, integrity checksums, etc.).

Behavioral analysis: Logging can serve as a useful dataset for behavioral analysis. Even if you do not have that implemented, having the data readily available is a big win.

IP allow list maintenance: There are other useful analytics that can be done on the usage activity, including identifying unused CIDR blocks (e.g. no access in the past year) that, when combined with the number of users and number of accesses, can help identify unused CIDRs that can be reviewed/culled from the list. One could perform this analysis periodically and manually using indexed searches, or it could be optimized with a serverless function that logs in a small cloud RDBMS table the last successful access from an IP and the associated allow list CIDR. This state table then is a simple query away from showing unused CIDRs to be reviewed.
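The state-table idea can be sketched with an in-memory SQLite database standing in for the small cloud RDBMS. The table name `last_access`, the function names, and the sample dates are all hypothetical; a serverless function would call something like `record_access` for each successful access.

```python
import ipaddress
import sqlite3
from datetime import date, timedelta

def init_table(conn, allow_list):
    """Create the state table with one row per allow-list CIDR and no access yet."""
    conn.execute("CREATE TABLE last_access (cidr TEXT PRIMARY KEY, last_seen TEXT)")
    conn.executemany("INSERT INTO last_access (cidr) VALUES (?)",
                     [(cidr,) for cidr in allow_list])

def record_access(conn, allow_list, source_ip, day):
    """Store the access date for the matching CIDR if it is newer than what is stored."""
    address = ipaddress.ip_address(source_ip)
    stamp = day.isoformat()  # ISO dates sort correctly as text
    for cidr in allow_list:
        if address in ipaddress.ip_network(cidr):
            conn.execute(
                "UPDATE last_access SET last_seen = ? "
                "WHERE cidr = ? AND (last_seen IS NULL OR last_seen < ?)",
                (stamp, cidr, stamp),
            )

def unused_cidrs(conn, cutoff):
    """Return CIDRs never seen, or last seen before the cutoff date."""
    rows = conn.execute(
        "SELECT cidr FROM last_access "
        "WHERE last_seen IS NULL OR last_seen < ? ORDER BY cidr",
        (cutoff.isoformat(),),
    )
    return [row[0] for row in rows]

allow_list = ["192.0.2.0/24", "198.51.100.0/24", "203.0.113.0/24"]
conn = sqlite3.connect(":memory:")
init_table(conn, allow_list)

today = date(2024, 6, 1)
record_access(conn, allow_list, "192.0.2.10", today - timedelta(days=3))
record_access(conn, allow_list, "203.0.113.5", today - timedelta(days=400))

stale = unused_cidrs(conn, today - timedelta(days=365))
print(stale)
```

Here the CIDR with no recorded access and the one last used 400 days ago are both flagged for review, while the recently used range is not.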

Training: On a periodic audit basis (quarterly), looking at anomalies such as the top-N users who keep getting denied (they forget to connect to the VPN or proxy) may be useful for training of users.
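A top-N report of this kind needs very little code once the denied events are available. In the sketch below, the event structure and the function `top_denied_users` are assumptions; the events would come from whatever log search you already have.

```python
from collections import Counter

def top_denied_users(denied_events, n):
    """Return the n principals with the most denied requests, highest first."""
    counts = Counter(event["principal"] for event in denied_events)
    return counts.most_common(n)

# Hypothetical denied-access events pulled from logs for the audit period.
denied_events = [
    {"principal": "alice"}, {"principal": "bob"}, {"principal": "alice"},
    {"principal": "carol"}, {"principal": "alice"}, {"principal": "bob"},
]

for principal, count in top_denied_users(denied_events, 2):
    print(principal, count)
```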

Auto-generation of Filters/Scripts: Consider automation of the generation of scripts or event filters used for searches, if they are specific to the IP allow list policy definition. In this way, any changes in the IP allow list can automatically update what’s needed for implementing monitoring against that IP allow list or for performing searches on logs with respect to the IP allow list activity.

All of the above are just considerations or options; what makes sense, or is worth the effort, depends on the priorities and needs of your organization.

Conclusion

In this blog, we’ve touched on a broader set of issues related to an old concept, IP allow lists, including:

- Policy definition

- Implementation

- Configuration drift

- Monitoring/logging

How much to do in each area, if anything, is up to you. However, it’s worth reviewing some of the considerations in each area so that your implementations are explicitly planned out even if they are simplified.

Hopefully, you have a simple framework that can be reused in not just IP allow lists but other security policies you might apply in the cloud.
