Introduction

Best practices for securing an AWS environment, such as AWS’s own guidance, are well documented and generally accepted. However, organizations may still find it challenging to begin applying this guidance to their specific environments:

- Which controls should be applied out-of-the-box vs. customized?

- What pitfalls exist in implementing the various controls or checks?

- How do you prioritize remediation of the “sea of red” violations?

In this blog series, we’ll analyze anonymized data from Netskope customers that includes the security settings of 650,000 entities from 1,143 AWS accounts across several hundred organizations. We’ll look at these configurations from the perspective of best practices, see what commonly occurs in the real world, and:

- Discuss specific risk areas that should be prioritized

- Identify underlying root causes and potential pitfalls

- Focus on practical guidance for applying the CIS AWS Foundations Benchmark to your specific environment

This blog post focuses on security controls related to storage: S3 buckets and EBS volumes. Based on the Netskope dataset analyzed, we highlight two opportunities to improve security:

- Remove Public Buckets: Review public S3 buckets and set Block Public Access. Over 58% of buckets do not have Block Public Access set.

- Encryption: Encrypt S3 buckets and EBS volumes and enforce HTTPS transfers for S3 bucket access. More than 40% of S3 buckets and EBS volumes are not encrypted at rest, and more than 88% of buckets allow unencrypted access using HTTP.

Storage

Four controls related to storage best practices are:

- Ensure that your Amazon S3 buckets are not publicly accessible

- Enforce encryption of data in transit from S3 buckets

- Set server-side data encryption for S3 buckets

- Set server-side data encryption for EBS volumes

In our dataset, we looked at configuration settings for 26,228 buckets and 132,912 volumes across 1,143 accounts, with the following findings:

| # | Best Practice | # Violations | % Violating |
|---|---|---|---|
| 1 | Ensure that your Amazon S3 buckets are not publicly accessible | 15,293 | 58.3 |
| 2 | Enforce encryption of data in transit from S3 buckets | 23,143 | 88.2 |
| 3 | Set server-side data encryption for S3 buckets | 11,621 | 44.3 |
| 4 | Set server-side data encryption for EBS volumes | 59,877 | 45.0 |

1. Public S3 Buckets

Background: Controlling access to data in S3 buckets can be complicated because permissions can be specified in several ways: object ACLs, bucket ACLs, bucket policies, and IAM user policies. In some cases, such as a bucket that serves public content for a web server, public access (by anonymous users or any authenticated user) is expected. In many cases, however, publicly accessible data is unintended and is a common cause of data loss.

AWS provides a setting, “Block Public Access,” that, when enabled, keeps the bucket private by ignoring any public ACLs and restricting access granted by public bucket policies. It also blocks attempts to make the bucket public by adding public ACLs or public bucket policies. Conversely, when it is disabled, any public ACLs and policies take effect, and authorized users can change the bucket ACLs and policies to make the bucket public. Note that a bucket can have “Block Public Access” disabled without actually having any public ACLs or policies; in this case, the bucket is “potentially public.” New buckets have “Block Public Access” set by default.
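Block Public Access is made up of four settings (BlockPublicAcls, IgnorePublicAcls, BlockPublicPolicy, and RestrictPublicBuckets), which `aws s3api get-public-access-block --bucket <bucket-name>` returns under a `PublicAccessBlockConfiguration` key. The Python sketch below shows one way to test whether a bucket has it set, assuming that “set” means all four settings are true; that criterion and the function name are our assumptions, not the dataset's documented definition.

```python
import json

# The four Block Public Access settings, as named in the S3 API.
BPA_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def block_public_access_enabled(cli_output):
    """Return True only if all four Block Public Access flags are true.

    cli_output: JSON text printed by `aws s3api get-public-access-block`,
    or None if the bucket has no Public Access Block configuration
    (the CLI reports an error in that case).
    Assumption: "set" means all four flags are enabled.
    """
    if cli_output is None:
        return False
    config = json.loads(cli_output).get("PublicAccessBlockConfiguration", {})
    return all(config.get(flag, False) is True for flag in BPA_FLAGS)


sample = json.dumps({"PublicAccessBlockConfiguration": {
    "BlockPublicAcls": True, "IgnorePublicAcls": True,
    "BlockPublicPolicy": True, "RestrictPublicBuckets": False,
}})
print(block_public_access_enabled(sample))  # False: one flag is off
```

This only inspects the bucket-level configuration; an account-level Public Access Block, readable with `aws s3control get-public-access-block --account-id <account-id>`, applies on top of it.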

Data: 15,293 (58.3%) of the S3 buckets in this dataset do not have “Block Public Access” enabled.

Analysis:

We will look further into the data to answer two questions:

- A bucket with “Block Public Access” disabled has not necessarily exposed data publicly; it only means the bucket could be configured to be public. What subset of buckets actually expose data publicly, and what subset are only potentially public?

- Of the buckets that are public, are any expected to be public (e.g., a web server)?

Public vs. Potentially Public Buckets

To answer the first question, we break down the 15,293 buckets that have “Block Public Access” disabled to see which of them actually have public bucket policies or bucket ACLs. A bucket with public ACLs or public policies is categorized as “public”; otherwise, it is categorized as “potentially public.” In evaluating “public,” we follow the AWS definition described in Blocking public access to your Amazon S3 storage.

| Description | Notes | # Buckets | % |
|---|---|---|---|
| Block Public Access is not set | | 15,293 | 100 |
| Potentially Public | These buckets do not have public bucket policies or bucket ACLs. | 14,714 | 96 |
| Public | These buckets have public bucket policies or ACLs. | 579 | 4 |
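The categorization above can be sketched in Python as follows. It assumes the ACL comes from `aws s3api get-bucket-acl` and the policy's public status from `aws s3api get-bucket-policy-status`, which reports AWS's own evaluation as `PolicyStatus.IsPublic`; treating grants to the AllUsers and AuthenticatedUsers groups as public follows the AWS definition. The function names and the “blocked” label are hypothetical.

```python
# Grantee URIs that AWS treats as public in bucket ACLs.
PUBLIC_GRANTEE_URIS = {
    "http://acs.amazonaws.com/groups/global/AllUsers",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers",
}


def acl_is_public(acl):
    """acl: parsed output of `aws s3api get-bucket-acl`."""
    return any(
        grant.get("Grantee", {}).get("URI") in PUBLIC_GRANTEE_URIS
        for grant in acl.get("Grants", [])
    )


def policy_is_public(policy_status):
    """policy_status: parsed output of `aws s3api get-bucket-policy-status`,
    or None if the bucket has no bucket policy."""
    if policy_status is None:
        return False
    return bool(policy_status.get("PolicyStatus", {}).get("IsPublic", False))


def classify_bucket(block_public_access, acl, policy_status):
    """Return 'blocked', 'public', or 'potentially public' (hypothetical labels)."""
    if block_public_access:
        return "blocked"
    if acl_is_public(acl) or policy_is_public(policy_status):
        return "public"
    return "potentially public"


public_acl = {"Grants": [{
    "Grantee": {"Type": "Group", "URI": "http://acs.amazonaws.com/groups/global/AllUsers"},
    "Permission": "READ",
}]}
print(classify_bucket(False, public_acl, None))      # public
print(classify_bucket(False, {"Grants": []}, None))  # potentially public
```

Here `block_public_access` is a single boolean; a bucket with only some of the four settings enabled could still be public, which this simplification does not capture.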

The vast majority of the buckets (14,714 out of 15,293, or 96%) are “potentially public”: they do not have “Block Public Access” set and do not yet have any public bucket policies or ACLs. These buckets can easily be made public by users with permission to change bucket ACLs or policies. They should be reviewed, and if they are meant to be private, “Block Public Access” should be enabled.

In other words, 4% of the 15,293 buckets are at high risk, while the other 96% are potentially at risk. This split should guide prioritization for remediation, with the public buckets reviewed first.

Unexpected vs. Expected Public Buckets

Of the 579 public buckets above, some are public because of their purpose, such as serving a public website. The buckets that are not serving web content should be reviewed to see whether they should be changed to private. This table breaks down the 579 public buckets into web servers and non-web servers:

| Description | Notes | # Buckets | % |
|---|---|---|---|
| Public Buckets | | 579 | 100 |
| Web Server (Static Website) | These buckets either set CORS headers or respond with HTTP status code 200 to an HTTP GET request of the bucket's static website endpoint. | 242 | 42 |
| Non-Web Server | These buckets have public bucket policies or ACLs but do not meet the static website criteria above. | 337 | 58 |

We found that 337 (58%) of the 579 public buckets are not static websites. These buckets should be reviewed to determine whether they need to be public, and if not, made private by enabling Block Public Access.
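One way to turn these findings into a remediation order is sketched below. The tiers are our assumption: public non-website buckets first (the unexpected exposure), then potentially public buckets, then public static websites, which mostly need confirming and tagging. The record fields (`name`, `category`, `static_website`) and the bucket names are hypothetical.

```python
def remediation_priority(bucket):
    """Lower number = remediate first (assumed tiers, not an AWS standard)."""
    if bucket["category"] == "public" and not bucket["static_website"]:
        return 0  # unexpected public exposure
    if bucket["category"] == "potentially public":
        return 1  # Block Public Access off, nothing public yet
    if bucket["category"] == "public":
        return 2  # public by design: confirm and tag
    return 3      # already blocked: nothing to do


def remediation_queue(buckets):
    """Return names of buckets needing review, most urgent first.

    sorted() is stable, so input order is kept within a tier.
    """
    ordered = sorted(buckets, key=remediation_priority)
    return [b["name"] for b in ordered if remediation_priority(b) < 3]


buckets = [
    {"name": "site-assets", "category": "public", "static_website": True},
    {"name": "team-share", "category": "potentially public", "static_website": False},
    {"name": "exports", "category": "public", "static_website": False},
    {"name": "logs", "category": "blocked", "static_website": False},
]
print(remediation_queue(buckets))  # ['exports', 'team-share', 'site-assets']
```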

Controls:

- Detection/Audit

Auditing public buckets can be done using several methods:

- CLI: The configuration settings for each bucket, including Public Access Block, ACLs, and policies, can be spot-checked in the AWS Console or CLI:

```shell
aws s3api get-public-access-block --bucket <bucket-name>
aws s3api get-bucket-policy --bucket <bucket-name>
aws s3api get-bucket-acl --bucket <bucket-name>
```

Note that the Public Access Block can be set at the bucket or account level, and that individual objects can have their own ACLs.

- AWS Access Analyzer for S3 can provide in-depth findings on public buckets and allows easy remediation.

- AWS Config also has three rules that can detect buckets with public access:


- Prevention/Mitigation

- Public Access Block: The best prevention for unexpected public buckets is to apply the Public Access Block to private buckets. This not only keeps them private but also prevents them from accidentally being made public, because changes that would add public bucket policies or ACLs are blocked.

The Console and CLI provide easy ways to set the Public Access Block setting:

```shell
aws s3api put-public-access-block --bucket <bucket-name> --public-access-block-configuration BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=true,RestrictPublicBuckets=true
```

- Public Accounts: If possible, isolate public buckets in dedicated accounts that hold public resources, set the Public Access Block at the account level in all other accounts, and grant cross-account access from other AWS accounts as necessary. In this way, accounts become clear administrative and security boundaries, and public resources are easier to maintain and track.

- Tags: A standard tagging system also helps in identifying, tracking, and searching for public buckets. When public buckets are provisioned, they should be tagged with a well-known tag, which makes it easy to audit expected vs. unexpected public buckets.

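The Public Access Block and tagging advice can be combined in a small helper. This sketch assumes a hypothetical tag `public-access=approved` marks intentionally public buckets (the `TagSet` list comes from `aws s3api get-bucket-tagging`) and returns the `put-public-access-block` command for every other bucket rather than running it, so the result can be reviewed before anything changes.

```python
import shlex

# Hypothetical tagging convention for buckets that are public by design.
PUBLIC_TAG_KEY = "public-access"
PUBLIC_TAG_VALUE = "approved"

BPA_CONFIG = (
    "BlockPublicAcls=true,IgnorePublicAcls=true,"
    "BlockPublicPolicy=true,RestrictPublicBuckets=true"
)


def is_tagged_public(tag_set):
    """tag_set: the TagSet list from `aws s3api get-bucket-tagging`."""
    return any(
        tag.get("Key") == PUBLIC_TAG_KEY and tag.get("Value") == PUBLIC_TAG_VALUE
        for tag in tag_set
    )


def block_public_access_command(bucket, tag_set):
    """Return the CLI command enabling Block Public Access on `bucket`,
    or None if the bucket is tagged as intentionally public."""
    if is_tagged_public(tag_set):
        return None
    return shlex.join([
        "aws", "s3api", "put-public-access-block",
        "--bucket", bucket,
        "--public-access-block-configuration", BPA_CONFIG,
    ])


print(block_public_access_command("exports", []))
print(block_public_access_command(
    "site-assets", [{"Key": "public-access", "Value": "approved"}]))  # None
```

Note that `get-bucket-tagging` reports an error for a bucket with no tags; in that case, pass an empty list.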

2. S3 Encryption-in-transit

Background: Encrypting data transfers to and from S3 buckets helps protect against man-in-the-middle attacks and supports data compliance regulations. Data transfers are only guaranteed to be encrypted if every access method, including API/CLI access and web access to the bucket, is forced to use HTTPS.

HTTPS data transfers can be enforced with a bucket policy containing a Deny statement that applies when the AWS condition key aws:SecureTransport is false:

```json
{
  "Id": "ExamplePolicy",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowSSLRequestsOnly",
      "Action": "s3:*",
      "Effect": "Deny",
      "Resource": [
        "arn:aws:s3:::DOC-EXAMPLE-BUCKET",
        "arn:aws:s3:::DOC-EXAMPLE-BUCKET/*"
      ],
      "Condition": {
        "Bool": {
          "aws:SecureTransport": "false"
        }
      },
      "Principal": "*"
    }
  ]
}
```

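Make sure that this is a Deny statement that matches on “false,” rather than an Allow statement that matches on “true”: an Allow statement does not stop HTTP requests that other policies already allow. This is explained in What S3 bucket policy should I use to comply with the AWS Config rule s3-bucket-ssl-requests-only?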
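A regular audit of bucket policies can look for exactly this shape of statement. The Python sketch below parses the `Policy` string returned by `aws s3api get-bucket-policy` and reports whether a Deny statement with `aws:SecureTransport` set to false covers the bucket and its objects. It is deliberately strict and mirrors the example policy: statements using wildcard resources, `NotResource`, or narrower actions are not recognized, so treat a False result as “needs review” rather than proof of a gap. The function names are hypothetical.

```python
import json


def _as_list(value):
    """Policy fields may hold a single value or a list of values."""
    return value if isinstance(value, list) else [value]


def enforces_https(policy_text, bucket):
    """Return True if the policy denies all S3 actions on the bucket and its
    objects when aws:SecureTransport is false.

    policy_text: the "Policy" string from `aws s3api get-bucket-policy`.
    """
    policy = json.loads(policy_text)
    required = {f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"}
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Deny":
            continue
        if stmt.get("Principal") not in ("*", {"AWS": "*"}):
            continue
        if "s3:*" not in _as_list(stmt.get("Action", [])):
            continue
        bool_cond = stmt.get("Condition", {}).get("Bool", {})
        if "aws:SecureTransport" not in bool_cond:
            continue
        # Accept "false", ["false"], or a JSON boolean false.
        values = {str(v).lower() for v in _as_list(bool_cond["aws:SecureTransport"])}
        if "false" in values and required.issubset(_as_list(stmt.get("Resource", []))):
            return True
    return False
```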

Data: 23,143 (88%) of the S3 buckets in this dataset do not enforce encryption in transit; that is, they have no bucket policy requiring HTTPS for data transfers.

Analysis: The large majority (88%) indicates that this policy is rarely applied to the buckets in this dataset. Since the policy is easy to implement and otherwise transparent to users, organizations should enforce encryption of data transfers immediately to achieve a higher level of security and compliance.

Controls:

- Detection/Audit

- The best way to prevent unencrypted data in transit is to run regular, automated checks on all bucket policies to ensure that each bucket has the appropriate aws:SecureTransport condition.

- Bucket policies can be audited through the CLI:

```shell
aws s3api get-bucket-policy --bucket <bucket-name>
```

- Prevention/Mitigation

- Bucket policies can be set through the Console or CLI:

```shell
aws s3api put-bucket-policy --bucket <bucket-name> --policy file://policy.json
```

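Because `put-bucket-policy` replaces the bucket's entire policy, the SSL-only statement should be merged into any existing policy rather than written over it. A minimal Python sketch, assuming the existing policy text comes from `aws s3api get-bucket-policy` (or None if the bucket has none) and that the `AllowSSLRequestsOnly` Sid is used to detect a previously added statement; the function names are hypothetical.

```python
import json

SSL_ONLY_SID = "AllowSSLRequestsOnly"


def ssl_only_statement(bucket):
    """Deny statement modeled on the AllowSSLRequestsOnly example in this post."""
    return {
        "Sid": SSL_ONLY_SID,
        "Action": "s3:*",
        "Effect": "Deny",
        "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
        "Condition": {"Bool": {"aws:SecureTransport": "false"}},
        "Principal": "*",
    }


def add_ssl_only_statement(policy_text, bucket):
    """Return policy JSON with the SSL-only statement merged in.

    Existing statements are preserved because put-bucket-policy
    replaces the whole policy.
    """
    if policy_text:
        policy = json.loads(policy_text)
    else:
        policy = {"Version": "2012-10-17", "Statement": []}
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    # Assumption: a statement with this Sid means the rule was already added.
    if not any(s.get("Sid") == SSL_ONLY_SID for s in statements):
        statements.append(ssl_only_statement(bucket))
    policy["Statement"] = statements
    return json.dumps(policy, indent=2)
```

The returned JSON can be saved to policy.json and applied with `aws s3api put-bucket-policy --bucket <bucket-name> --policy file://policy.json`.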

3. S3 Encryption-at-rest

Background:

Most compliance/control frameworks specify that default server-side encryption should be enabled for data storage. Encryption of S3 bucket data at rest can help limit unauthorized access and mitigate the impact of data loss. Several server-side encryption approaches are available, with different trade-offs in terms of changes required by users/clients, security, and costs:

- SSE-S3: Server-Side Encryption with keys provided and managed by AWS (Amazon S3-managed keys)

- SSE-KMS: Server-Side Encryption with CMKs provided by either AWS or the customer and managed by AWS in AWS Key Management Service (KMS)

- SSE-C: Server-Side Encryption with keys provided and managed by the customer

Here are some of the key differences:

| Method | Master Key provided by | Master Key managed by | Work involved | Cost | Security Implications |
|---|---|---|---|---|---|
| 1. SSE-S3 | AWS | AWS | Low: configure bucket encryption | No additional charges | Transparent to authorized bucket users; any authorized user has access; better for compliance |
| 2a. SSE-KMS (AWS) | AWS | AWS | Moderate: configure bucket encryption; specify AWS CMK ID in API calls | Some additional charges, e.g., 10K uploads and 2M downloads per month might cost ~$7/mo. | Helps mitigate over-privileged access, i.e., a user who doesn't have access to the key in KMS can't access the data; audit trail of CMK use |
| 2b. SSE-KMS (Customer) | Customer | AWS | Moderate: configure bucket encryption; provision CMK; specify customer CMK ID in API calls | Some additional charges, e.g., 10K uploads and 2M downloads per month might cost ~$7/mo. | Helps mitigate over-privileged access, i.e., a user who doesn't have access to the key in KMS can't access the data; audit trail of CMK use |
| 3. SSE-C | Customer | Customer | Higher: provision the key; pass it in every API call (SSE-C cannot be set as default bucket encryption); store and manage the key | No additional charges | Mitigates over-privileged access with tighter control of the key, i.e., a user who doesn't have the actual key can't access the data |
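The “~$7/mo” estimate can be reproduced with simple arithmetic. This sketch assumes AWS KMS list prices of $0.03 per 10,000 requests and $1 per customer-managed key per month (check current pricing for your Region), and one KMS request per upload or download; the KMS free tier and S3 Bucket Keys, both of which lower the cost, are not modeled. The function name is hypothetical.

```python
# Assumed AWS KMS list prices; verify against current pricing for your Region.
PRICE_PER_10K_REQUESTS = 0.03    # USD, symmetric-key API requests
CUSTOMER_KEY_MONTHLY_FEE = 1.00  # USD per customer-managed key per month


def estimate_sse_kms_monthly_cost(uploads, downloads, customer_managed_key):
    """Rough monthly KMS cost in USD, assuming one KMS request per
    upload or download (no free tier, no S3 Bucket Keys)."""
    requests = uploads + downloads
    cost = requests / 10_000 * PRICE_PER_10K_REQUESTS
    if customer_managed_key:
        cost += CUSTOMER_KEY_MONTHLY_FEE
    return round(cost, 2)


print(estimate_sse_kms_monthly_cost(10_000, 2_000_000, customer_managed_key=True))   # 7.03
print(estimate_sse_kms_monthly_cost(10_000, 2_000_000, customer_managed_key=False))  # 6.03
```

Under these assumptions the AWS-managed key case comes out slightly lower, because no monthly key fee applies to AWS-managed keys.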

Additional details on trade-offs and guidance on setting and enforcing server-side encryption are described in: Changing your Amazon S3 encryption from S3-Managed to AWS KMS.

Data: 11,621 (44%) of the S3 buckets in this dataset do not have any encryption enabled.

Analysis: Almost half of the S3 buckets do not encrypt data at rest. At a minimum, AWS-managed server-side encryption (SSE-S3) should be enabled for compliance reasons, since it is free and transparent to users.
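The minimum bar suggested here, some form of default server-side encryption, is straightforward to check from the output of `aws s3api get-bucket-encryption`. A Python sketch, assuming None is passed when the command reports that the bucket has no encryption configuration; the function names are hypothetical.

```python
import json


def default_encryption_algorithm(cli_output):
    """Return the default SSEAlgorithm (e.g. "AES256" or "aws:kms"), or None.

    cli_output: JSON text printed by `aws s3api get-bucket-encryption`,
    or None if no encryption configuration exists.
    """
    if cli_output is None:
        return None
    config = json.loads(cli_output).get("ServerSideEncryptionConfiguration", {})
    for rule in config.get("Rules", []):
        default = rule.get("ApplyServerSideEncryptionByDefault")
        if default and default.get("SSEAlgorithm"):
            return default["SSEAlgorithm"]
    return None


def meets_minimum(cli_output):
    """Minimum bar from the analysis: some default server-side encryption."""
    return default_encryption_algorithm(cli_output) is not None


sample = ('{"ServerSideEncryptionConfiguration": {"Rules": [{"ApplyServerSideEncryption'
          'ByDefault": {"SSEAlgorithm": "AES256"}, "BucketKeyEnabled": false}]}}')
print(default_encryption_algorithm(sample))  # AES256
print(meets_minimum(None))                   # False
```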

Controls:

- Detection/Audit

- Bucket encryption settings can be audited through the CLI:

```shell
aws s3api get-bucket-encryption --bucket <bucket-name>
```

- AWS Config can also detect S3 buckets that do not have server-side encryption enabled.


- Prevention/Mitigation

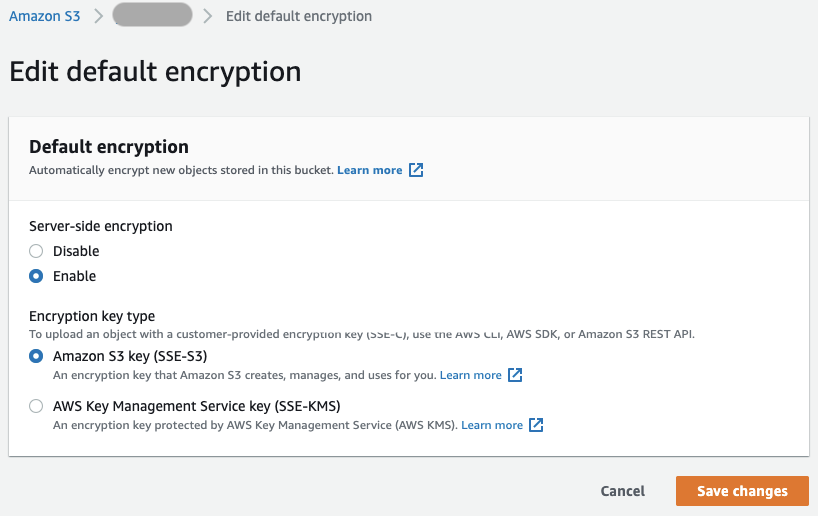

- For S3 buckets, you can select one of the two available encryption types in the Console:

- Encryption can also be set using the CLI:

```shell
# SSE-S3 (AES256)
aws s3api put-bucket-encryption --bucket <bucket-name> --server-side-encryption-configuration '{"Rules":[{"ApplyServerSideEncryptionByDefault":{"SSEAlgorithm":"AES256"}}]}'
# SSE-KMS with a specific KMS key
aws s3api put-bucket-encryption --bucket <bucket-name> --server-side-encryption-configuration '{"Rules":[{"ApplyServerSideEncryptionByDefault":{"SSEAlgorithm":"aws:kms","KMSMasterKeyID": "arn:aws:kms:...:key/<key_id>"}}]}'
```

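Hand-quoting the JSON in these commands is error-prone, so a small helper can build it instead. This Python sketch produces the same configurations as the CLI examples (SSE-S3 when no key is given, SSE-KMS otherwise) and returns the command for review rather than running it; the function names are hypothetical.

```python
import json
import shlex


def encryption_configuration(kms_key_arn=None):
    """Build the --server-side-encryption-configuration JSON.

    Without a key ARN this selects SSE-S3 (AES256); with one, SSE-KMS.
    """
    if kms_key_arn:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_arn}
    else:
        default = {"SSEAlgorithm": "AES256"}
    return json.dumps({"Rules": [{"ApplyServerSideEncryptionByDefault": default}]})


def put_bucket_encryption_command(bucket, kms_key_arn=None):
    """Return a shell-quoted put-bucket-encryption command."""
    return shlex.join([
        "aws", "s3api", "put-bucket-encryption",
        "--bucket", bucket,
        "--server-side-encryption-configuration",
        encryption_configuration(kms_key_arn),
    ])


print(put_bucket_encryption_command("my-bucket"))
```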

4. EBS Volume Encryption-at-rest

Background:

Encryption of EBS volumes helps support compliance controls around data privacy.

Additional best practices for EBS encryption can be found here: Must-know best practices for Amazon EBS encryption.

Data: 59,877 (45%) of the EBS volumes in this dataset do not have encryption enabled.

Analysis: Similar to S3 buckets, about 45% of EBS volumes are unencrypted. For compliance purposes, EBS encryption can be enabled by default to protect data at rest, using either AWS-managed or customer-managed keys. Since encryption with the AWS-managed key is free and easy, customers should at a minimum enable this setting.
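EBS encryption by default is a per-Region setting and applies only to volumes created after it is enabled, so an audit should cover every Region in use and, separately, existing volumes. This Python sketch assumes the JSON outputs of `aws ec2 get-ebs-encryption-by-default --region <region>` and `aws ec2 describe-volumes` have already been collected; the function names are hypothetical.

```python
import json


def regions_without_default_ebs_encryption(outputs_by_region):
    """outputs_by_region: {region: JSON text from
    `aws ec2 get-ebs-encryption-by-default --region <region>`}."""
    return sorted(
        region
        for region, text in outputs_by_region.items()
        if json.loads(text).get("EbsEncryptionByDefault") is not True
    )


def unencrypted_volume_ids(describe_volumes_text):
    """describe_volumes_text: JSON text from `aws ec2 describe-volumes`.

    Enabling encryption by default does not change these existing volumes.
    """
    volumes = json.loads(describe_volumes_text).get("Volumes", [])
    return [v["VolumeId"] for v in volumes if not v.get("Encrypted", False)]


print(regions_without_default_ebs_encryption({
    "us-east-1": '{"EbsEncryptionByDefault": true}',
    "eu-west-1": '{"EbsEncryptionByDefault": false}',
}))  # ['eu-west-1']
```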

Controls:

- Detection/Audit

- To prevent unencrypted EBS volumes, run the detection check below as a regularly scheduled, automated job to verify the default encryption settings.

- The CLI can be used to check the current default EBS encryption settings:

```shell
aws ec2 get-ebs-encryption-by-default
```

- AWS Config can also detect when EBS encryption is not enabled by default.

- Prevention/Mitigation

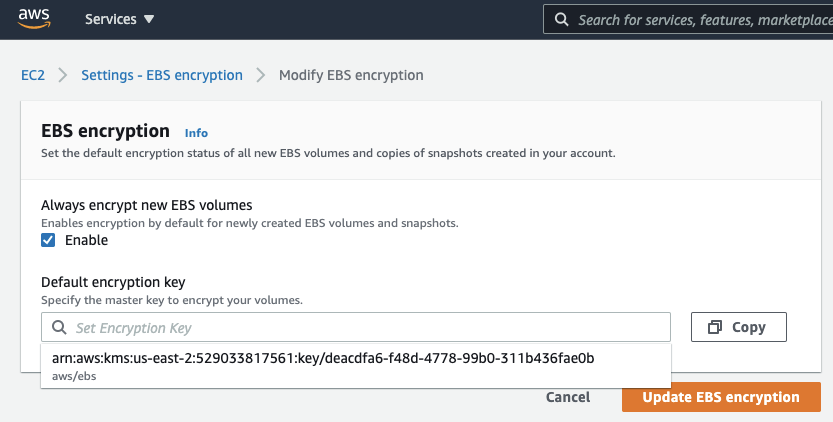

- Default EBS encryption can be set using the Console:

- To enable default EBS encryption and, optionally, set a customer-managed default KMS key via the CLI:

```shell
aws ec2 enable-ebs-encryption-by-default
aws ec2 modify-ebs-default-kms-key-id --kms-key-id <KMSKeyId>
```

Note: if you do not set a default KMS key, the AWS-managed CMK (aws/ebs) is used.

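Because EBS encryption by default is a per-Region setting, enabling it everywhere means repeating the commands for each Region. This Python sketch only generates the commands for review; it assumes a mapping from Region to KMS key ID when a customer-managed key is wanted, since KMS keys are Regional. The function name and any key IDs are hypothetical.

```python
import shlex


def ebs_default_encryption_commands(regions, kms_keys_by_region=None):
    """Return CLI commands enabling EBS encryption by default per Region.

    kms_keys_by_region: optional {region: KMS key ID}. Regions without an
    entry keep the AWS-managed key (aws/ebs).
    """
    kms_keys_by_region = kms_keys_by_region or {}
    commands = []
    for region in regions:
        commands.append(shlex.join(
            ["aws", "ec2", "enable-ebs-encryption-by-default", "--region", region]))
        key_id = kms_keys_by_region.get(region)
        if key_id:
            commands.append(shlex.join(
                ["aws", "ec2", "modify-ebs-default-kms-key-id",
                 "--kms-key-id", key_id, "--region", region]))
    return commands


for command in ebs_default_encryption_commands(["us-east-1", "eu-west-1"]):
    print(command)
```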

Conclusion

The CIS AWS Foundations Benchmark provides specific guidance on auditing and remediating your configurations in these areas. Here are some basic measures that can be taken to address common risk areas arising from storage or network configuration in your AWS environment:

- Data Encryption: Encrypt S3 buckets and EBS volumes, using the default encryption options provided by AWS. Create a bucket policy to enforce HTTPS transfers for S3 bucket access.

- Public Buckets: Review all public S3 buckets and set Block Public Access for those that do not need public access.

You can implement your own checks with API or CLI scripts run as scheduled jobs, and in some cases AWS tools help, such as Access Analyzer for S3 for bucket access checks. In other cases, for ease of maintenance and scalability, products such as Netskope’s Public Cloud Security platform can automatically perform the detection and audit checks mentioned in this blog.

Dataset and Methodology

Time Period: Data was sampled/analyzed from January 24, 2021.

Source: The analysis presented in this blog post is based on anonymized usage data collected by the Netskope Security Cloud platform relating to a subset of Netskope customers with prior authorization.

Data Scope: The data included 1,143 AWS accounts and several hundred organizations.

The data was composed of configuration settings across tens of thousands of AWS entities including IAM users, IAM policies, password policy, buckets, databases, CloudTrail logs, compute instances, and security groups.

Logic: The analysis followed the logic of core root account security checks found in best practices regarding AWS configuration settings.
