The pace of innovation in generative AI has been nothing short of explosive. What began with users experimenting with public apps like ChatGPT has rapidly evolved into widespread enterprise adoption. AI features are now seamlessly embedded into everyday business tools, such as customer service platforms like Gladly, HR software like Lattice, and even social media networks like LinkedIn.

Meanwhile, many organizations are taking things further by building their own AI applications, powered by open-access models, such as DeepSeek and LLaMA, and supported by communities like Hugging Face that enable seamless sharing of models and data. Over the past year, the number of organizations running local genAI infrastructure has skyrocketed from less than 1% to over 50%.

But as innovation accelerates, so do the risks. We are already familiar with shadow IT and data loss as top concerns for public genAI applications. Netskope Threat Labs reports that shadow IT drives 72% of genAI app usage, often involving the upload of sensitive data like source code, regulated information, and intellectual property. And while just 5% of users interact directly with standalone genAI apps, more than 75% are using enterprise tools with embedded genAI capabilities. Now that organizations are also deploying local models, the risk landscape continues to evolve, introducing new challenges around data access, model governance, and operational oversight.

This moment represents more than just a shift in productivity; it’s a turning point for security leaders. The question isn’t if you’ll embrace AI, but how you’ll secure its adoption.

Take charge of your AI journey with Netskope One

Managing AI risk today means understanding your evolving AI landscape and enforcing granular policies that secure your entire AI ecosystem end-to-end, everywhere. Netskope One brings together Netskope’s industry-leading security service edge (SSE) capabilities to secure users, agents, data, and applications across your enterprise.

With the Netskope One platform, powered by the full range of SkopeAI innovations, you’re not just securing your AI journey; you’re mastering it. Here’s what that looks like in action:

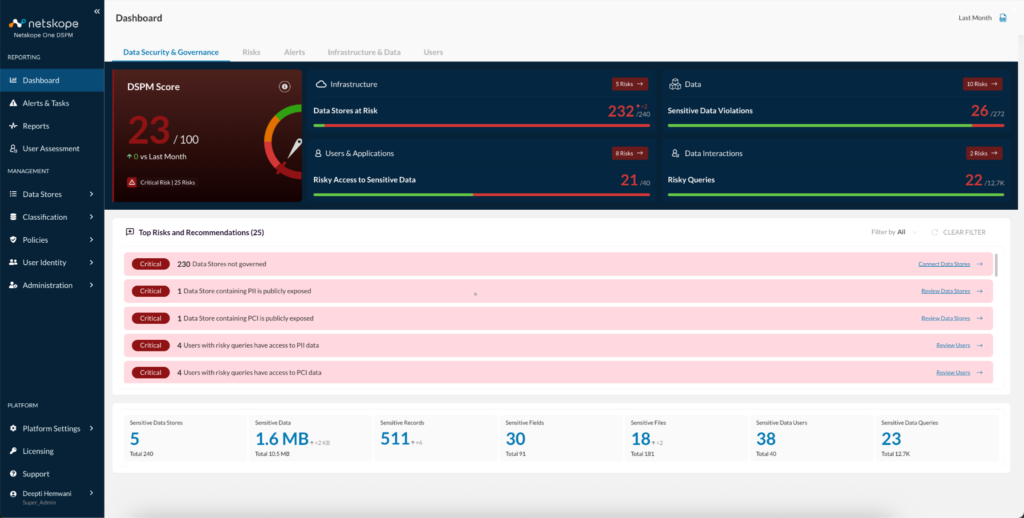

Achieve AI readiness with a proactive understanding of your data and applications

True AI readiness starts with knowing what data you have and where it lives. With Netskope One DSPM, your organization can automatically discover, classify, and label both structured and unstructured data across various data stores, such as AWS S3, GCP BigQuery, Azure Blob Storage, and PostgreSQL. This visibility empowers teams to set data exposure policies before AI models ever touch sensitive content, preventing confidential data from being used to train public or private LLMs. For example, a financial services firm can ensure its enterprise genAI tools are not trained on highly sensitive financial data, avoiding unintended exposure. This kind of foresight and control is what enables organizations to be truly AI-ready.

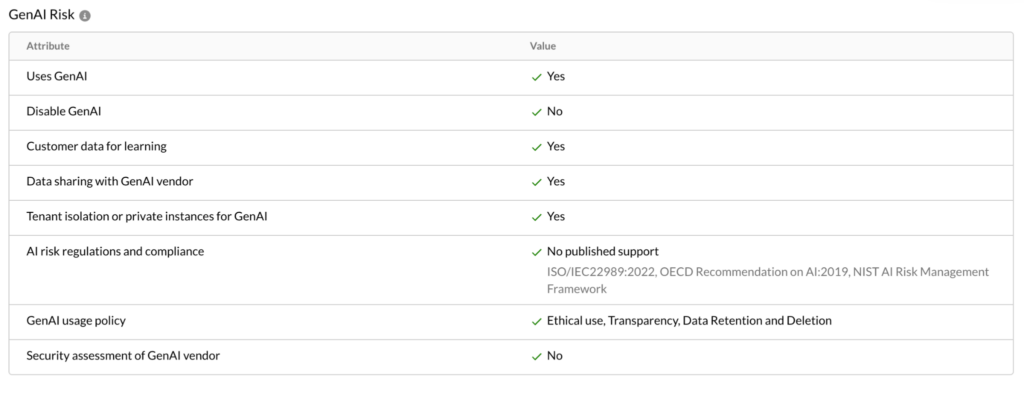

For a deep understanding of your applications, the Netskope Cloud Confidence Index (CCI) provides rich risk context, empowering teams to make informed decisions about which AI-enabled apps to allow, restrict, or block. This is especially critical when it comes to protecting intellectual property, source code, or regulated data. Netskope identifies and classifies risk for more than 82,000 public applications. Whether it’s a dedicated genAI tool or a traditional SaaS application enhanced with genAI features, Netskope One provides detailed insights into its risk characteristics, including whether it uses customer data for training, shares data with third parties, or meets specific compliance requirements, so you can decide how it should be used in your environment.

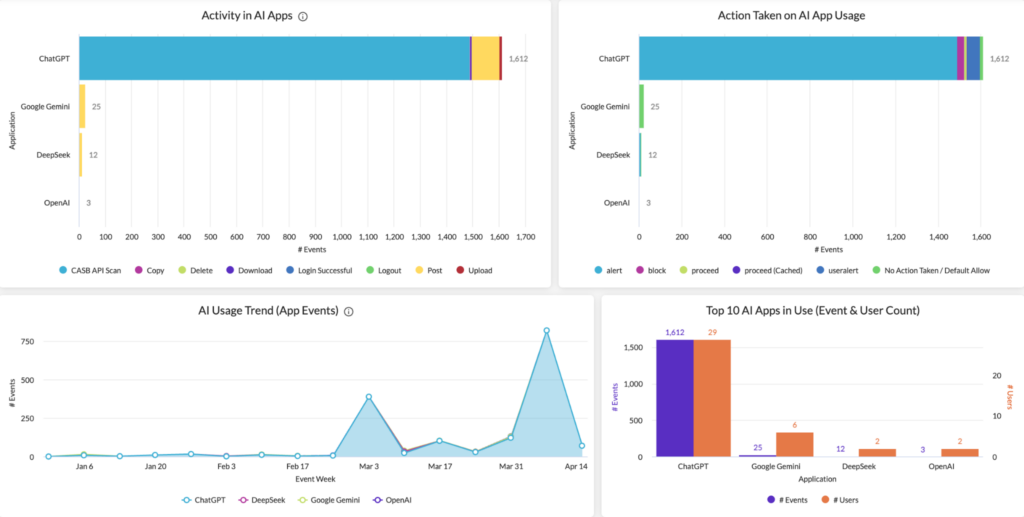

Gain complete visibility into your AI landscape

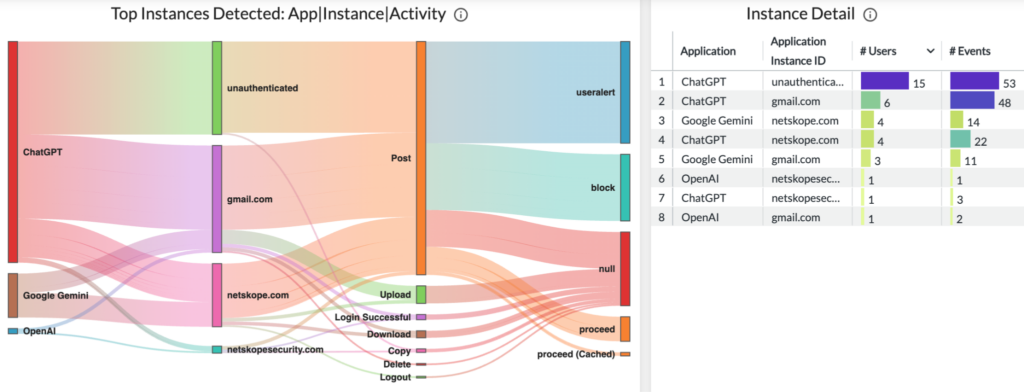

Visibility is the foundation of responsible AI adoption. As security teams set and continuously evaluate their AI policies, they need to understand how users are interacting with AI and which tools are being used. With Netskope One, your AI initiatives are empowered by the full context of AI interactions within your organization. The Netskope One platform provides comprehensive visibility into both managed and unmanaged genAI SaaS applications, helping you tackle the challenge of shadow IT. On the AI dashboard, view trends of AI activity, top AI applications used, actions taken within those applications, and more.

Whether you’re monitoring employee access to genAI models or identifying data leakage between personal and corporate genAI applications, Netskope’s granular visibility enables you to proactively manage risks. With this in-depth insight, you can move beyond blind trust to gain strategic control over your AI activities, ensuring secure deployment of AI across your organization.

Enforce contextual protection, securing every user and agent, everywhere

In the fast-evolving world of AI, security must be dynamic and adaptable. With full context from risk assessments, inline and API-based protections, and posture checks, Netskope’s unified security policies seamlessly govern user (and soon agent) interactions, enabling precise, granular control. With advanced data loss prevention (DLP) capabilities, Netskope helps safeguard sensitive information from exfiltration across public SaaS apps like ChatGPT, Microsoft Copilot, and Google Gemini. This means preventing unauthorized data access, monitoring AI interactions to ensure policy compliance, and detecting and mitigating emerging threats in real time.

While many vendors focus solely on securing user access to AI tools, Netskope goes a step further by offering visibility and protection across the full AI lifecycle, including how data is used, shared, and learned from. Whether it’s securing AI-enhanced collaboration tools or protecting data flowing through a private LLM, Netskope provides the broadest protection available for enterprise apps and data, from public clouds to private environments.

Next Steps

To learn more about how Netskope One delivers the visibility and control needed to secure your entire AI ecosystem, visit our Securing AI page.
