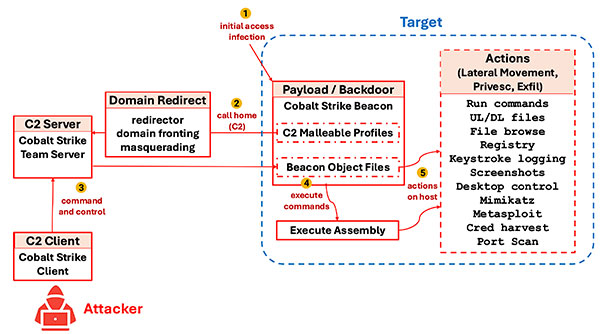

Attackers are adding new and sophisticated command-and-control (C2) capabilities to their malware that easily evade common static defenses such as IPS signatures and IP/domain/URL block lists. They do so by using common, widely available C2 framework tools such as Cobalt Strike, Brute Ratel, Mythic, Metasploit, Sliver, and Merlin. These tools were originally designed for penetration testing and red team operations, and they provide post-exploitation capabilities including command-and-control, privilege escalation, and actions on host.

However, attackers have co-opted these same toolkits and embedded them in malware. Many of the products, such as Mythic and Merlin, are open source, while commercial products such as Cobalt Strike and Brute Ratel have been obtained by attackers through hacked copies or leaked source code. This has effectively turned these tools into adversarial C2 frameworks for malicious post-exploitation.

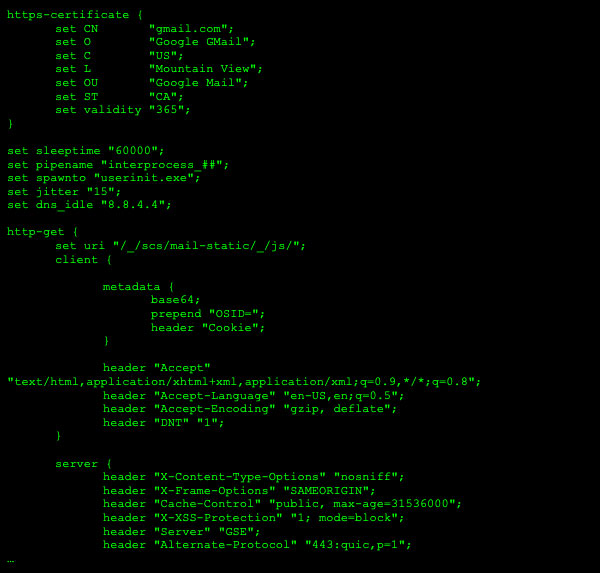

The tools can easily shape and change many parameters of C2 communications, enabling malware to evade current defenses even more easily and for longer periods. This causes greater damage within victim networks, including stealing more data, discovering more valuable data, disrupting the availability of business apps and services, and maintaining hidden access to networks for future damage.

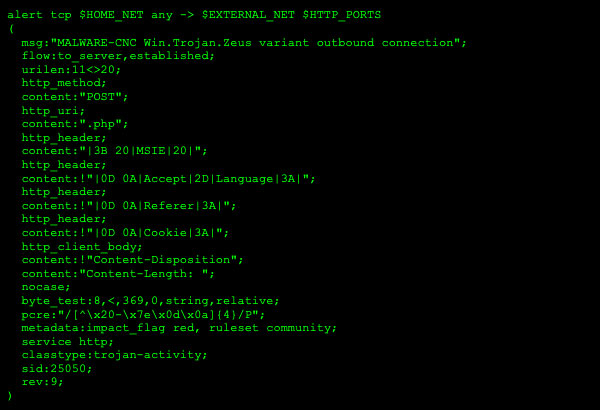

Current approaches to detecting the latest malware that uses C2 frameworks rely on static signatures and indicators, including detection of implant executables, IPS signatures for C2 traffic, and IP/URL filters. These are inadequate for dealing with the dynamic, malleable profiles of the widely available C2 framework tools.

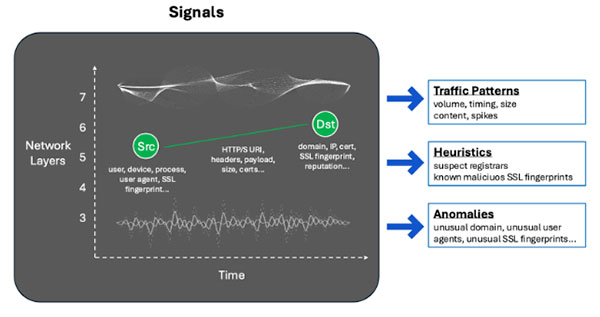

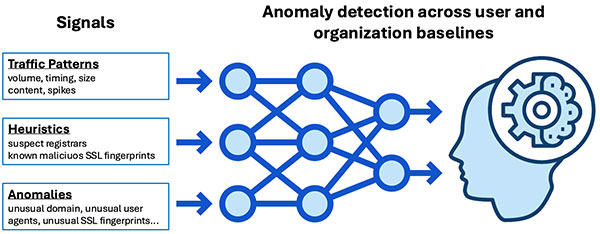

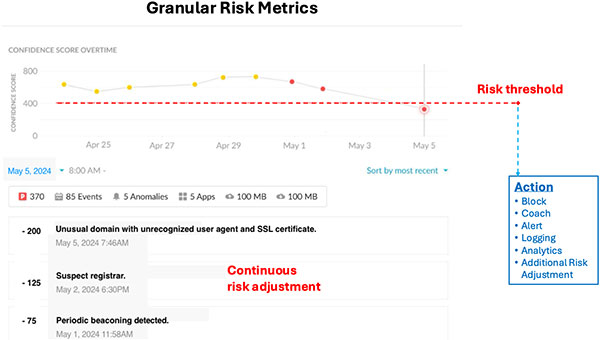

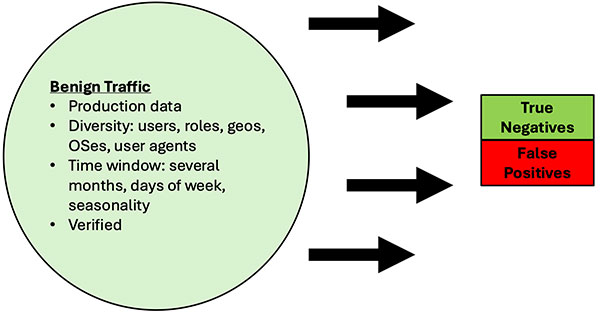

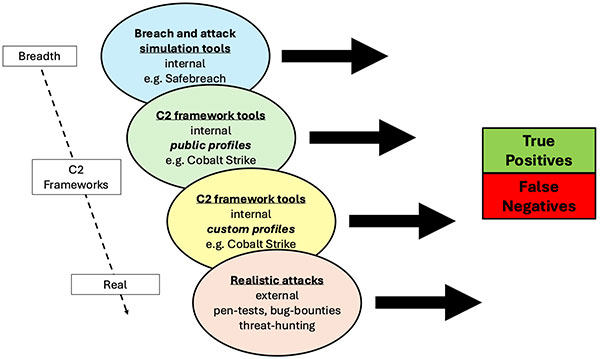

A new approach is required, one that is not so rigidly tied to known attacks but is instead based on anomaly detection over a comprehensive set of signals fed into trained machine-learning models, with fine-grained tracking of device and user risk. This approach supplements existing approaches, and it can dramatically increase detection rates while keeping false positives low and future-proofing against the evolving C2 traffic patterns that these same C2 framework tools easily enable.
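To make the contrast with static indicators concrete, here is a minimal sketch of one possible anomaly signal. It is an illustration only and not the method described in this paper. It assumes that the regularity of a client's connection times to a single destination is one useful signal, and that an organization-level baseline is available for comparison. The function names, the `org_baseline_cv` parameter, and the `ratio` threshold are all hypothetical.

```python
from statistics import mean, pstdev


def interval_regularity(timestamps):
    """Coefficient of variation of the gaps between connections.

    Values near 0 mean very regular, beacon-like timing.
    Returns None when there are too few connections to judge.
    """
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    if len(gaps) < 2:
        return None
    avg = mean(gaps)
    if avg == 0:
        return None
    return pstdev(gaps) / avg


def is_suspicious(timestamps, org_baseline_cv, ratio=0.25):
    """Flag timing far more regular than the baseline (hypothetical rule).

    org_baseline_cv: assumed typical coefficient of variation for this
    organization's ordinary traffic. ratio is an illustrative threshold.
    """
    cv = interval_regularity(timestamps)
    return cv is not None and cv < org_baseline_cv * ratio


# Connection times in seconds to one destination (made-up values).
jittered_beacon = [0, 58, 121, 179, 242]
ordinary_browsing = [0, 5, 7, 90, 400]

print(is_suspicious(jittered_beacon, org_baseline_cv=1.0))
print(is_suspicious(ordinary_browsing, org_baseline_cv=1.0))
```

A single signal like this is easy for a malleable profile to defeat by adding more jitter, which is why it would be combined with many other signals and with per-user and per-device risk tracking rather than used on its own.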

This paper discusses the gaps in current approaches and the increased efficacy from using a focused machine-learning approach with additional network signals and fine-grained risk metrics based on models at the user and organization level. We also discuss some of the key challenges in testing the efficacy of any C2 beacon detection solution.
