This year’s Cloud and Threat Report on AI apps focuses on genAI application trends and risks, because genAI use is growing rapidly and has broad reach and wide impact on enterprise users. 96% of organizations surveyed have users using genAI, and the number of users has tripled over the past 12 months. Real-world uses of genAI apps include help with coding, writing assistance, creating presentations, and video and image generation. These use cases present data security challenges, specifically how to prevent sensitive data, such as source code, regulated data, and intellectual property, from being sent to unapproved apps.

We start by looking at usage trends of genAI applications, then analyze the broad-based risks introduced by the use of genAI, discuss specific controls that are effective and may help organizations improve in the face of incomplete data or new threat areas, and end with a look at future trends and implications.

Based on millions of anonymized user activities, genAI app usage has gone through significant changes from June 2023 to June 2024:

- Virtually all organizations now use genAI applications, with use increasing from 74% to 96% of organizations over the past year.

- GenAI adoption is growing rapidly and has yet to reach a steady state. The average organization uses more than three times as many genAI apps, and has nearly three times as many users actively using those apps, as it did one year ago.

- Data risk is top of mind for early adopters of genAI apps, with the sharing of proprietary source code with genAI apps accounting for 46% of all data policy violations.

- Adoption of security controls to safely enable genAI apps is on the rise, with more than three-quarters of organizations using block/allow policies, DLP, live user coaching, and other controls to enable genAI apps while safeguarding data.

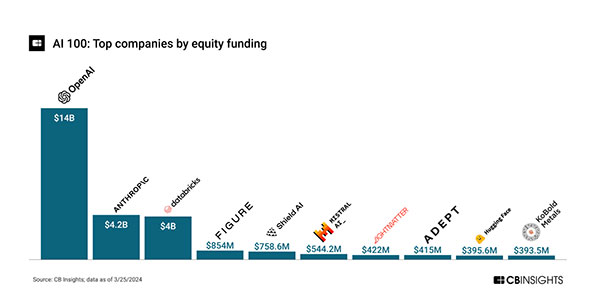

AI in general has been popular and has attracted considerable investment, with funding totaling over $28B across 240+ equity deals from 2020 through March 22, 2024.[1]

With OpenAI and Anthropic accounting for nearly two-thirds (64%) of the total funding, AI funding is dominated and driven by genAI. This reflects the growing excitement around genAI since OpenAI’s ChatGPT release in November 2022. In addition to startups, multiple AI-focused ETFs and mutual funds have been created, indicating an additional source of funding from public market investors. The large amount of investment will provide a tailwind for research and development and product releases, and will bring associated risks and abuses.

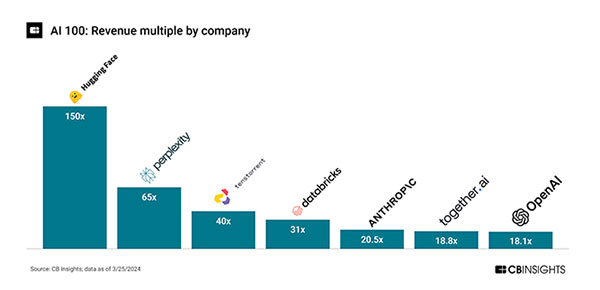

Outsized price-to-sales ratios indicate that execution is lagging behind lofty investor expectations. Hugging Face is valued at a 150x multiple ($4.5B valuation on $30M in revenue) and Perplexity at a 65x multiple ($520M valuation on $8M in revenue).[1]
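The multiples quoted above follow from dividing valuation by revenue. A minimal sketch of that arithmetic is shown here, using only the figures cited in the text; the function name `price_to_sales` and the `companies` dictionary are illustrative and not part of the report's methodology.

```python
def price_to_sales(valuation_usd: float, revenue_usd: float) -> float:
    """Return valuation divided by revenue (the price-to-sales multiple)."""
    if revenue_usd <= 0:
        raise ValueError("revenue must be positive")
    return valuation_usd / revenue_usd


# (valuation, revenue) in US dollars, as quoted in the text above.
companies = {
    "Hugging Face": (4.5e9, 30e6),
    "Perplexity": (520e6, 8e6),
}

# Compute the multiple for each company.
multiples = {
    name: price_to_sales(valuation, revenue)
    for name, (valuation, revenue) in companies.items()
}

for name, multiple in multiples.items():
    print(f"{name}: {multiple:.0f}x")
```

Under these inputs the sketch reproduces the 150x and 65x multiples cited in the text.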

Although real revenue is lagging, product release activity is high, indicating that we are still early in the AI innovation cycle, with heavy R&D investment. As an example, there have been 34 feature releases (minor and major) of ChatGPT since November 2022[2], or approximately two per month.

It’s clear that genAI will be the driver of AI investment in the short term and will introduce the broadest risk and impact to enterprise users. It is or will be bundled by default on major application, search, and device platforms, with use cases such as search, copy-editing, style/tone adjustment, and content creation. The primary risk stems from the data users send to the apps, including data loss, unintentional sharing of confidential information, and inappropriate use of information (legal rights) from genAI services. Currently, text-based apps (LLMs) are used the most because of their broader use cases, although genAI apps for video, images, and other media are also a factor.

This report summarizes genAI usage and trends based on anonymized customer data over the past 12 months, detailing application use, user actions, risk areas, and early controls while providing prescriptive guidance for the next 12 months.
